On Sept. 18, the House Energy and Commerce Committee is marking up 16 pieces of legislation, including the so-called Kids Online Safety Act and the Children and Teens’ Online Privacy Protection Act (COPPA). Both would dramatically erode the liability protections that websites and applications currently enjoy under existing law, including Section 230 of the Communications Decency Act.

For example, Section 110 of the Kids Online Safety Act allows states to bring civil actions against any “public-facing website, online service, online application, or mobile application that predominantly provides a community forum for user-generated content” over perceived “harms” allegedly inflicted upon users, including minors.

Actions by every single state in the country against websites and other applications would bring a new level of regulation to the internet never seen before: “In any case in which the attorney general of a State has reason to believe that a covered platform has violated or is violating section 103, 104, or 105, the State, as parens patriae, may bring a civil action on behalf of the residents of the State in a district court of the United States or a State court of appropriate jurisdiction…”

The bill also authorizes the Federal Trade Commission to issue guidance and other regulations covering all websites and applications subject to the bill. These would require content filtration on behalf of minors and other highly expensive mechanisms that could prevent small businesses from functioning on the internet at all. They would also raise serious First Amendment concerns, because they would fundamentally alter the content being published on these websites and applications.

All of these provisions would directly undermine core protections of websites and other applications that were enacted by Congress in 1996, including Section 230 of the Communications Decency Act.

47 U.S.C. Section 230(c)(1) forms part of the internet’s liability shield, stating, “No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider.”

Subsections (c)(2)(A) and (c)(2)(B) form the other part of that protection, stating, “No provider or user of an interactive computer service shall be held liable on account of any action voluntarily taken in good faith to restrict access to or availability of material that the provider or user considers to be obscene, lewd, lascivious, filthy, excessively violent, harassing, or otherwise objectionable, whether or not such material is constitutionally protected; or any action taken to enable or make available to information content providers or others the technical means to restrict access to material described…”

These provisions simultaneously grant websites and other interactive computer services a broad exemption from liability for whatever users happen to post, and grant the companies power to remove, at their discretion, items they find objectionable. The delicate balance this creates shields websites and other applications from lawsuits, allows user networks to exist and otherwise protects the First Amendment rights of those websites by giving them the final say over what goes on their platforms.

Removing the liability protections, as the Kids Online Safety Act and COPPA envision by creating clear exceptions to Section 230, would subject almost every website and interactive computer service to liability from the millions of users on these websites, and would do so on purpose.

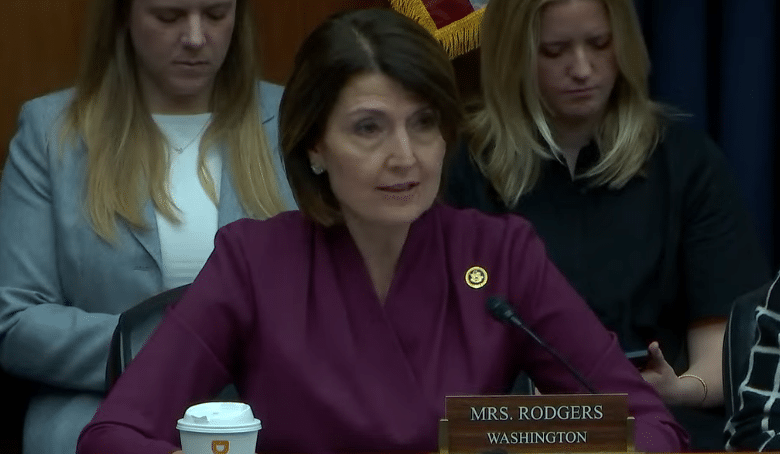

The intent behind eroding these protections was made clear at a May 22 hearing entitled “Legislative Proposal to Sunset Section 230 of the Communications Decency Act.” The hearing discussed draft legislation proposed by House Energy and Commerce Committee Chair Cathy McMorris Rodgers (R-Wash.) and Ranking Member Frank Pallone, Jr. (D-N.J.), the “Section 230 Sunset Act,” which would end Section 230 protections on Dec. 31, 2025 in order to force Big Tech companies to negotiate directly with Congress.

In a May 12 op-ed in the Wall Street Journal, McMorris Rodgers and Pallone outlined the blackmail provision, stating, “It would require Big Tech and others to work with Congress over 18 months to evaluate and enact a new legal framework that will allow for free speech and innovation while also encouraging these companies to be good stewards of their platforms. Our bill gives Big Tech a choice: Work with Congress to ensure the internet is a safe, healthy place for good, or lose Section 230 protections entirely.”

And the goal is censorship. U.S. Rep. Bob Latta (R-Ohio), who chaired the May 22 subcommittee hearing, promised in his opening statement that Congress is going to “reform [Section 230] in a way that will protect innovation and promote free speech and allow Big Tech to moderate indecent and illegal content on its platforms and be accountable to the American people.”

The subcommittee’s ranking member, U.S. Rep. Doris Matsui (D-Calif.), blasted Section 230, saying Congress needed to address “disinformation” allowed on websites: “The broad shield [Section 230] offers can serve as a haven for harmful content, disinformation and online harassment.” She included so-called “election disinformation” in that category.

So, right off the bat, the goal of sunsetting Section 230 (legislation that thankfully went nowhere) was to force a discussion about other legislation that would regulate “indecent” content or “disinformation.”

The Kids Online Safety Act and COPPA are that other anticipated legislation. And they have already passed the Senate overwhelmingly, 91 to 3, making the House the last line of defense for the free and open internet. Despite massive efforts to conduct oversight of the executive branch, whose actions resulted in censorship, Congress is pushing yet more measures that would expand the scope of censorship to include the Federal Trade Commission and all 50 states to boot.

It would break the internet, on purpose. The only platforms that could afford to assume the risk of hosting somebody else’s material that the government might deem “harmful” will be those with teams of lawyers and hundreds of billions of dollars of market cap. Those companies will get to define the regulations in ways that exclude smaller and emerging market actors that cannot afford to filter content. That will limit the creation of new platforms, raising the question: Does Congress even want a free and open internet?

Robert Romano is the Vice President of Public Policy at Americans for Limited Government.